Hello everyone

Nice to be here.

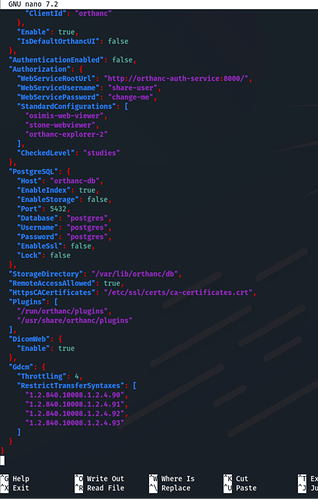

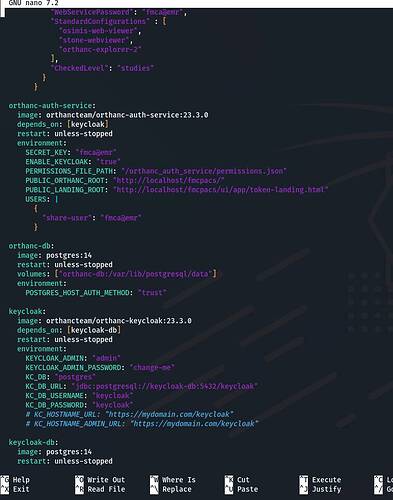

OK, I started using Orthanc and I really enjoyed it. However, I wanted more user control. I have Orthanc running with Keycloak authentication in a Docker environment, but I am having trouble locating the orthanc.json config file where I can change the AET, add modalities, and change the ingest transcoding settings. This is all I can see in the .json file. I have been searching through the Docker container without success. The .json file is attached.

I don’t know what package you are using, but with the Docker images the *.json config files are usually located in /etc/orthanc inside the Docker container itself. If you want to customize the configuration, there are a number of ways to do that, some of which are described here:

docker-osimis

You can also bind-mount or copy a *.json file from the host into the Docker container. If you are changing settings frequently during development, a .json file is a bit more convenient because you can make changes and just restart the container to see them, without having to rebuild.

I think Orthanc dynamically generates a configuration file that is stored in:

/tmp/orthanc.json

If you don’t specify any configuration files or settings yourself it probably just uses the defaults.
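As a rough illustration of the bind-mounted JSON approach, the sketch below writes a small config file that sets the AET and declares one modality and one peer. `DicomAet`, `DicomModalities` and `OrthancPeers` are option names from Orthanc's default configuration file; the AETs, host names, ports, credentials and the output file name are made-up placeholders, not values from this thread.

```python
import json
from pathlib import Path


def build_config(aet: str) -> dict:
    """Return a minimal Orthanc configuration fragment (placeholder values)."""
    return {
        # AET that this Orthanc announces to other DICOM nodes
        "DicomAet": aet,
        # Remote DICOM modalities: symbolic name -> [AET, host, port]
        "DicomModalities": {
            "ct-scanner": ["CT_SCANNER", "192.168.1.50", 104],
        },
        # Remote Orthanc peers (REST API): symbolic name -> [URL, user, password]
        "OrthancPeers": {
            "viewer": ["http://orthanc-viewer:8042/", "peer-user", "peer-password"],
        },
    }


def write_config(path: Path, config: dict) -> None:
    """Serialize the configuration as indented JSON."""
    path.write_text(json.dumps(config, indent=2), encoding="utf-8")


if __name__ == "__main__":
    # Hypothetical file name; bind-mount it into /etc/orthanc in the container
    write_config(Path("orthanc-custom.json"), build_config("MAINPACS"))
```

The generated file could then be mounted into /etc/orthanc in the container and picked up after a restart, assuming the image reads every *.json file in that directory.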

/sds

Thanks scotti

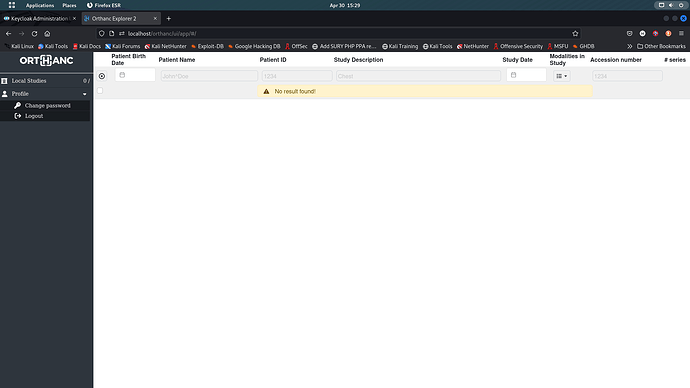

I have been able to get the configuration files and the server up and running. However, I am having difficulty receiving DICOM transfers from another running Orthanc peer. I intend to make the Osimis instance with Keycloak the main server and the other Orthanc peer the client viewer server. Here are my issues:

- I am able to send from the main server to the client viewer (another Orthanc peer without Keycloak authentication), but I am unable to send back from the peer to the main server.

- Also, the main server is unable to receive DICOM images from other connected modalities, such as CT and fluoroscopy.

I really don't know what's blocking the connection.

That gets to be a little complicated. There is a difference between using the DICOM protocol and Peering.

The Orthanc Book (“The Book”) documents this well, and it has sections about peering too.

The Default Config File has detailed comments about the configuration options.

You probably need to verify that you have connectivity and that your “DicomModalities” and “OrthancPeers” are configured correctly and with the correct permissions. There is an option to store those in a DB as well.

Troubleshooting DICOM connections can be tricky because the AET, port, IP, etc. all have to be set up correctly, TLS may or may not be configured, and there might be firewalls blocking connections.
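A first step that rules out firewalls and wrong ports is a plain TCP reachability check, run from the machine (or container) that is trying to send. The sketch below is only that: it does not perform a DICOM association or C-ECHO, so it says nothing about AETs or TLS. The host names are placeholders; 4242 and 8042 are Orthanc's default DICOM and HTTP ports, which your setup may have changed.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name resolution failures
        return False


if __name__ == "__main__":
    # Placeholder targets: replace with your own hosts and ports
    targets = [
        ("main server DICOM", "orthanc-main", 4242),
        ("main server HTTP", "orthanc-main", 8042),
    ]
    for label, host, port in targets:
        status = "reachable" if can_connect(host, port) else "NOT reachable"
        print(f"{label} ({host}:{port}): {status}")
```

If the port is reachable but transfers still fail, the problem is more likely in the AET, modality or peer configuration, or in the authorization layer, than in the network.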

There are some settings that are more ‘permissive’ for DICOM connections. Those can be helpful when troubleshooting.
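The post does not say which settings are meant. As an assumption, the sketch below uses four options from Orthanc's default configuration file that control how strictly incoming DICOM requests are checked, and applies relaxed values on top of an existing configuration. It is intended for troubleshooting only; the helper name `with_permissive_dicom` is hypothetical.

```python
import copy

# Option names taken from Orthanc's default configuration file. The relaxed
# values are an assumption about what "permissive" means here and should be
# tightened again once the connection works.
PERMISSIVE_OVERRIDES = {
    "DicomCheckCalledAet": False,     # do not reject on a mismatched called AET
    "DicomCheckModalityHost": False,  # do not verify the remote host/IP
    "DicomAlwaysAllowEcho": True,     # accept C-ECHO from unknown modalities
    "DicomAlwaysAllowStore": True,    # accept C-STORE from unknown modalities
}


def with_permissive_dicom(config: dict) -> dict:
    """Return a copy of config with the relaxed DICOM checks applied."""
    relaxed = copy.deepcopy(config)
    relaxed.update(PERMISSIVE_OVERRIDES)
    return relaxed


if __name__ == "__main__":
    base = {"DicomAet": "MAINPACS", "DicomCheckCalledAet": True}
    print(with_permissive_dicom(base))
```

If transfers start working with these values, re-enable the checks one at a time to find the setting that was rejecting the connection.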

/sds

Thanks once again, scotti, and I'm so sorry to bother you again.

The AET, IP and ports are all good. I had previously set up two Orthanc servers peered to one another and it worked perfectly well. Does Keycloak authentication block connections between Orthanc peers?

I don’t have that much experience using Keycloak yet. The “orthanc-team” does maintain a GitHub repository here with some examples, including with Keycloak. You should be able to determine whether you have “connectivity” using the OE2 (Orthanc Explorer 2) interface. Are you using that package, or did you just set up Keycloak on your own?

orthanc-auth-service

/sds

Thanks scotti

Yes, I am using that package. It was released a couple of weeks ago. I have gone through the README file but still can't find anything about peering.

Hello,

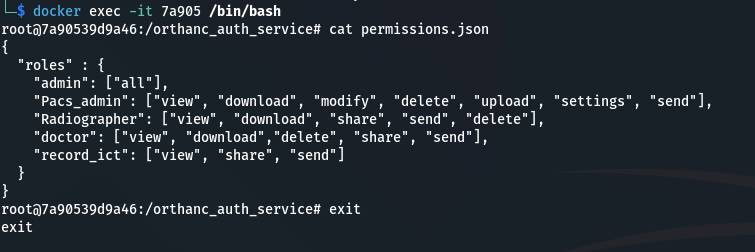

Have you defined a permissions.json file?

See more here:

Thanks @bcrickboom

Yes, I have defined the permissions.json file; see the attached image for reference.

The only thing is that I couldn’t locate the directory path for "Authorization.Permissions" mentioned in step 3. Aside from that, I followed all the required steps.

See the Docker Compose file here.

I might just start a new thread, but this is a somewhat related question regarding MedDream integration, and a general point about using one Orthanc instance to handle API requests and other tasks (i.e. viewer, DICOMweb, etc.) and another one to serve mostly as the ‘main’ DICOM server / PACS. That adds a bit of overhead but sort of separates tasks and might improve performance in some ways.

In a few of the setups in the referenced repo (the MedDream one in particular, with their custom Python script), you have a dedicated Orthanc instance to handle API requests. It shares the index DB and the storage with the ‘main’ Orthanc. Just curious whether that is ‘recommended’ with the MedDream setup, and whether it is a reasonable practice in general if a system makes extensive use of API requests in addition to the DICOM interface?

For example (there is a slightly different setup in another example):

    # An orthanc dedicated for the MedDream viewer. It has no access control since it
    # is accessible only from inside the Docker network
    orthanc-for-meddream:
      image: osimis/orthanc:23.2.0
      volumes:
        - orthanc-storage:/var/lib/orthanc/db
        - ./demo-setup/meddream/meddream-plugin.py:/scripts/meddream-plugin.py
      depends_on: [orthanc-db]
      restart: unless-stopped
      env_file:
        - ./demo-setup/common-orthanc.env
      environment:
        ORTHANC__NAME: "Orthanc for MedDream"
        VERBOSE_ENABLED: "true"
        VERBOSE_STARTUP: "true"
        ORTHANC__PYTHON_SCRIPT: "/scripts/meddream-plugin.py"
        ORTHANC__AUTHENTICATION_ENABLED: "false"

@sdscotti

Apologies for the late response.

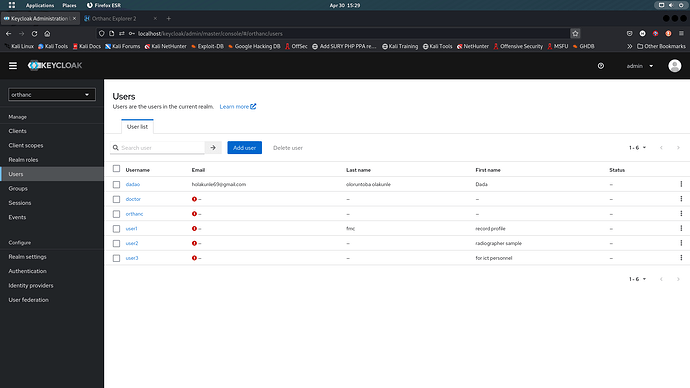

I have been able to figure out the permission and role issues I raised earlier. I just had to define permissions.json properly and map the permissions to the roles as described in the Keycloak manager.

As regards your question: it goes both ways, but personally I wouldn't recommend that. For production I always keep a client-side Orthanc, with OHIF in some cases. Honestly, it's a bit of overhead, since I had to set up a DICOM router and include a load balancer, but it all depends on the overall design of what you want to achieve.