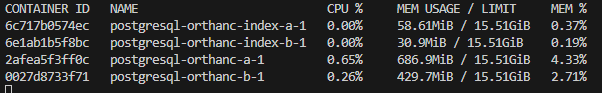

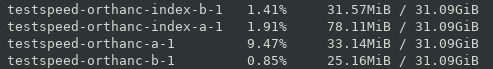

I have a moderately complex setup running in Docker containers.

At this point, I am not looking for answers to my current problem, but suggestions for how I might debug and approach the issue.

Currently, I am seeing CPU usage gradually increasing over a few days after receiving and/or anonymizing some moderately sized exams (10-20k images). The activity does not correspond to any ongoing script activity, user activity, or entries in the logs.

This is with Orthanc versions 1.12.0 and 1.11.3, both running the Python plugin 4.0.

Running strace on the active Orthanc process produces repeating entries every 100ms similar to:

clock_nanosleep(CLOCK_REALTIME, 0, {tv_sec=0, tv_nsec=100000000}, NULL) = 0

Given the complexity of my setup, there are several possible avenues I could explore to track down this CPU usage. At this point, I am wondering about the OnChange script I wrote and registered using the Python plugin.

I only make use of the STABLE_STUDY trigger and skip processing for all other triggers. I would not expect any other events passing through my code to create a CPU spike.
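For reference, here is a minimal sketch of the pattern I am describing, with some counting added so I can see how many events of each type arrive and how much time the callback itself consumes. It is not my actual script: `handle_stable_study`, `make_on_change`, `event_counts` and `stats` are hypothetical names made up for illustration, and the `try`/`except` around the import is only there so the counting logic can also be run outside Orthanc.

```python
import collections
import time

try:
    import orthanc  # only importable inside the Orthanc Python plugin
except ImportError:
    orthanc = None  # assumption: allows running the logic outside Orthanc

# Number of events seen, keyed by change type.
event_counts = collections.Counter()
# Total wall-clock seconds spent inside the callback.
stats = {"seconds_in_callback": 0.0}


def handle_stable_study(resource_id):
    # Hypothetical placeholder for the real STABLE_STUDY processing.
    pass


def make_on_change(stable_study_type):
    """Build a callback that ignores everything except stable studies."""
    def on_change(change_type, level, resource_id):
        start = time.perf_counter()
        event_counts[change_type] += 1
        if change_type == stable_study_type:
            handle_stable_study(resource_id)
        # All other change types fall through without any work.
        stats["seconds_in_callback"] += time.perf_counter() - start
    return on_change


if orthanc is not None:
    orthanc.RegisterOnChangeCallback(
        make_on_change(orthanc.ChangeType.STABLE_STUDY))
```

If the counters show tens of thousands of ignored events but a negligible total in `stats["seconds_in_callback"]`, that would suggest the time is going somewhere other than my callback body, although it would not measure any overhead incurred before the callback is entered.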

I do wonder about events listed at the NEW_INSTANCE level. Is it possible that the event generation of the NEW_INSTANCE for these 10-20k instance studies might arrive faster than can be acknowledged by my “ignore anything but stable study triggers” OnChange routine? This would imply that the system was able to write new instances to disk faster than it can process the events they generate.

Are these signals on the back end generated and sent for processing immediately? Or queued in some way? I mean before the signal even reaches my own OnChange routine. Is there some kind of rate limiter on the rate at which events might arrive?

I do not even know if the strace output is helpful. I am going to turn on the detailed/debug info in the logs to try to see if anything in the log corresponds to the CPU usage. If that doesn’t work, I will need to build a very simple test case setup and try to duplicate the problem.

Thanks for suggestions,

John.