Hi OrthanC community,

Since a couple of weeks when receiving images from the MR are coming up incomplete. Only the first couple of images are registered.

In Osirix, images are received without issue (from MR). Resending images from Osirix to OrthanC shows up same issue.

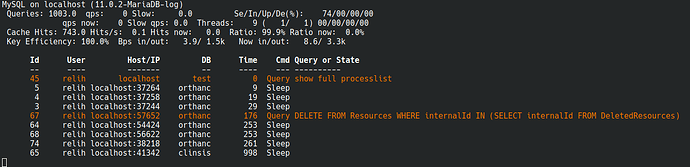

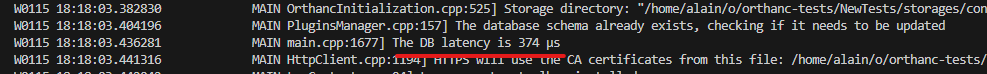

After investigating we found out that an image takes about 7 seconds to be processed. No resource outage is detected (atop/htop).

Network issue was discarded. We tried to send images connected almost directly to the server and issue persists.

Disks are two 12TB disks (7200 rpm) in RAID1.

Current OrthanC server has about 3.7 millions images, from 9,200 studies (mostly MR with “full details”). Maindicomtags table has about 93 millions records.

Database is Mariadb. Server Dell T140 running OpenSuse Tumbleweed.

I found out that issue is most probably due to bad database queries. These queries will probably be the same no matter the database used (MySQL, Postgres, Oracle…).

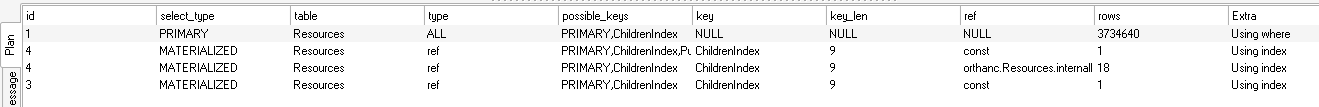

For example, on the “create temporary table” the explain plan show that a full table scan is performed on the resource table (>3 millions rows), and various “materialized” tables are created.

The “delete” based on the temporary table cause examined rows of more than 43 millions rows. Lots of IO’s.

Please note I did run the analyze table yesterday, so statistics are fine. I checked on another server, with less data, and explain plan is the same.

Using temporary tables should be avoided at all costs, since creation and deletion of the table consume lots of resources. Additionally, if temporary table has no index, and is used in other query, performance might be awful.

I am disposed to share my experience as DBA with you and help to better the performance of OrthanC if you wish.

In the meantime we will migrate the database to SSD drives, but this is just to reduce (and postpone) the problem. The root cause if the database queries.

Many thanks in advance.

Cedric Simon

Identified queries with lots of examined rows for few affected rows:

| rows_sent | rows_examined | db | sql_text | thread_id | rows_affected |

|---|---|---|---|---|---|

| 0 | 12648 | orthanc | CALL CreateInstance('0e651a19-8c8a0361-b139d495-dec7442a-f96… | 4 | 15 |

| 0 | 3714634 | orthanc | CREATE TEMPORARY TABLE DeletedResources SELECT * FROM (SELECT internalId, resourceType, publicId FROM Resources WHERE internal…. | 12 | 2 |

| 0 | 43322892 | orthanc | DELETE FROM Resources WHERE internalId IN (SELECT internalId FROM DeletedResources) | 11 | 2 |

| 0 | 55820 | orthanc | SELECT studies.publicId, MIN(instances.publicId) FROM (SELECT studies.publicId, studies.internalId FROM Resources AS studies INNER JOIN DicomIdentifiers t0 ON… | 4 | 0 |